Greetings, readers! In this article, I'll walk you through some nice techniques for enumerating ports 80 and 443, i.e. HTTP and HTTPS respectively, in the hope that you'll actually like some of them. Before I begin, I would like to list a couple of minor prerequisites that will help you follow along.

- An understanding of how HTTP works; and

- Basic familiarity with Nmap

If you are unfamiliar with the prerequisites I've mentioned, I highly recommend reading up on them first, though you can still proceed regardless. Now then, let's begin!

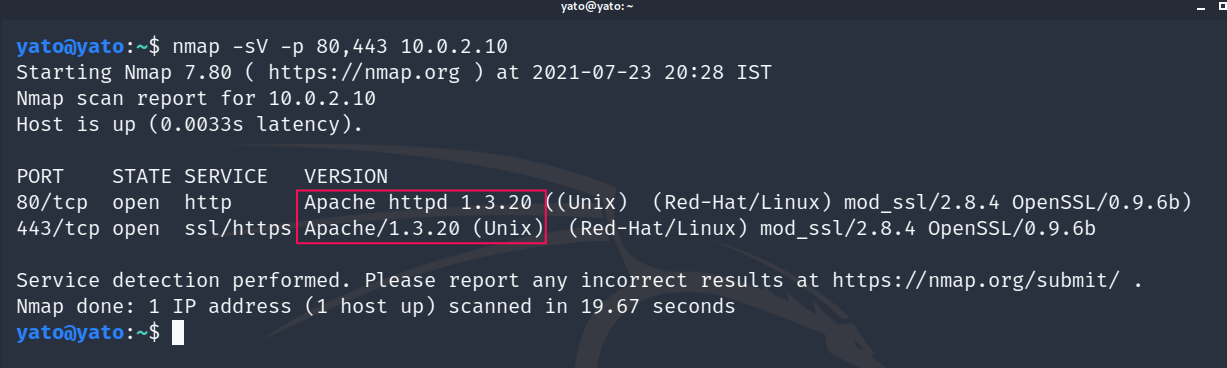

Identifying The Web Server

The first step is to discover whether a web server is running and which service versions it uses. An HTTP server listens on port 80 by default (and HTTPS on port 443); however, it can be configured to listen on a much higher port, which we as pentesters might miss.

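Thus, to discover the web server and identify the service versions, we perform a simple Nmap scan with the service version detection switch (-sV) enabled. Note that Nmap scans only the 1,000 most common ports by default, so add -p- to cover the full port range when hunting for servers on unusual ports.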

nmap -sV -T4 target
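Once the scan finishes, the web services still have to be picked out of the results, including any on non-standard ports. Here is a minimal Python sketch of that filtering step, assuming Nmap's normal text output; the `web_services` helper and the sample output are hypothetical and only illustrate the idea.

```python
import re
from typing import List, NamedTuple


class Service(NamedTuple):
    port: int
    name: str
    version: str


# Matches open TCP port lines of Nmap's normal output, for example:
# "8443/tcp open  ssl/http nginx 1.18.0"
_PORT_LINE = re.compile(r"^(\d+)/tcp\s+open\s+(\S+)\s*(.*)$")


def web_services(nmap_output: str) -> List[Service]:
    """Return open TCP services whose service name suggests HTTP or HTTPS."""
    found = []
    for line in nmap_output.splitlines():
        match = _PORT_LINE.match(line.strip())
        if match and "http" in match.group(2).lower():
            port, name, version = match.groups()
            found.append(Service(int(port), name, version.strip()))
    return found


# Hypothetical sample output, not taken from a real scan.
SAMPLE = """\
PORT     STATE SERVICE  VERSION
22/tcp   open  ssh      OpenSSH 8.2p1
80/tcp   open  http     Apache httpd 2.4.41
8443/tcp open  ssl/http nginx 1.18.0
"""

for svc in web_services(SAMPLE):
    print(svc.port, svc.name, svc.version)
```

For anything beyond a quick look, Nmap's XML output (-oX) is a sturdier thing to parse than the human-readable text.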

WAF Detection

Once we have confirmed that the web server is up and identified the services in use, the next step is to determine whether it sits behind a web application firewall (WAF) or some other kind of threat prevention service. Confirming the presence of a WAF is crucial, as it can filter or block the requests we send and hinder the pentest. A simple and efficient way to check is the WAFW00F tool, which comes preinstalled on Kali.

wafw00f https://target
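To get a feel for what such a check looks at, here is a small Python sketch of one passive technique: matching response headers against known signatures. It is a simplified illustration and not a description of WAFW00F's internals. The `guess_waf` function and its tiny signature table are assumptions made for this example, and dedicated tools carry far larger signature sets and also send probing requests.

```python
from typing import Dict, List

# A deliberately tiny table of response headers set by specific products.
# Illustrative only; dedicated tools ship many more signatures.
HEADER_SIGNATURES = {
    "cf-ray": "Cloudflare",
    "x-sucuri-id": "Sucuri",
}


def guess_waf(headers: Dict[str, str]) -> List[str]:
    """Return vendors whose signature headers appear in the response."""
    lowered = {key.lower(): value for key, value in headers.items()}
    hits = [vendor for name, vendor in HEADER_SIGNATURES.items() if name in lowered]
    # The Server header can also give the vendor away.
    if "cloudflare" in lowered.get("server", "").lower() and "Cloudflare" not in hits:
        hits.append("Cloudflare")
    return hits


# Hypothetical response headers for demonstration.
print(guess_waf({"Server": "cloudflare", "CF-RAY": "0000000000000000-XXX"}))
print(guess_waf({"Server": "nginx"}))
```

An empty result only means no signature matched; it does not prove that no WAF is present.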

Identifying The Web Technologies

This is a simple yet useful step, and identifying the web technologies will help you in multiple ways. If you find an outdated plugin or script in use, you can look for known exploits for it directly. Or suppose you find an upload utility on a webpage and think of uploading a payload instead of the intended file or document: unless you know which backend language is running, you cannot pick the right payload for the job.

To enumerate the web technologies, we can use the WhatWeb tool on Kali, or an online service such as Netcraft.
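Much of this fingerprinting comes down to reading clues the server volunteers. The Python sketch below shows the idea using response headers and default session cookie names. The `guess_technologies` function is hypothetical, its hint table covers only three well-known defaults, and it is far less thorough than a dedicated tool.

```python
from typing import Dict, Iterable, List

# Default session cookie names that hint at the backend platform.
COOKIE_HINTS = {
    "PHPSESSID": "PHP",
    "JSESSIONID": "Java (servlet container)",
    "ASP.NET_SessionId": "ASP.NET",
}


def guess_technologies(headers: Dict[str, str], cookie_names: Iterable[str]) -> List[str]:
    """Collect technology hints from response headers and cookie names."""
    lowered = {key.lower(): value for key, value in headers.items()}
    hints = []
    # These two headers often disclose the server software and backend runtime.
    for name in ("Server", "X-Powered-By"):
        value = lowered.get(name.lower())
        if value:
            hints.append(f"{name}: {value}")
    for cookie in cookie_names:
        if cookie in COOKIE_HINTS:
            hints.append(f"cookie {cookie}: {COOKIE_HINTS[cookie]}")
    return hints


# Hypothetical response data for demonstration.
example_headers = {"Server": "Apache/2.4.41 (Ubuntu)", "X-Powered-By": "PHP/7.4.3"}
print(guess_technologies(example_headers, ["PHPSESSID"]))
```

Headers and cookie names can be changed or stripped by the administrator, so treat the output as hints rather than proof.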

Reviewing The Source-Code

Code-gazing is a good practice, and you should make a habit of it. Developers often leave sensitive information in comments, which can be useful to an attacker or even amount to an information disclosure. While reviewing source code, you'll often find user credentials, login pages, admin panels, and links to hidden files and directories on the web server.

Here I've attached a screenshot of a website's source code in which the developer followed the poor practice of hardcoding a blacklist-based form filter, which can easily be bypassed with characters that aren't blacklisted.
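When a page is long, pulling the comments out programmatically saves some scrolling. Here is a minimal Python sketch using the standard library's `html.parser`; the `extract_comments` helper and the sample page are hypothetical and exist only for illustration.

```python
from html.parser import HTMLParser
from typing import List


class CommentCollector(HTMLParser):
    """Collects the text of every HTML comment it encounters."""

    def __init__(self) -> None:
        super().__init__()
        self.comments: List[str] = []

    def handle_comment(self, data: str) -> None:
        text = data.strip()
        if text:
            self.comments.append(text)


def extract_comments(html: str) -> List[str]:
    """Return all non-empty HTML comments found in the given page source."""
    parser = CommentCollector()
    parser.feed(html)
    parser.close()
    return parser.comments


# Hypothetical page source for demonstration.
PAGE = """
<html>
  <!-- TODO: remove test account before go-live -->
  <body>
    <!-- old uploads live under /backup/ -->
    <p>Welcome</p>
  </body>
</html>
"""

for comment in extract_comments(PAGE):
    print(comment)
```

This only covers HTML comments; comments inside inline JavaScript or linked script files still need a manual look.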

The Robots File and Sitemap

Almost every website has a robots.txt file, which defines the scope for crawlers. Directories and files disallowed in the robots file are not crawled by compliant search engine bots, and hence are generally not indexed. These unindexed directories are often where sensitive content is placed. Keep in mind that robots.txt is only advisory and does nothing to restrict access by itself. In real-life applications, these directories are usually off-limits to non-admin users; in Capture The Flag events, however, it is common to find something of value in the robots file. Either way, a good practice is to enumerate as much as you can.

The sitemap (typically sitemap.xml) works like a physical map: it lists the site's content in an organized manner, which helps search bots index it. If found, it can lead us to a hidden directory that we wouldn't have discovered otherwise.
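Both files are easy to mine once you have fetched them. The Python sketch below pulls the Disallow entries out of a robots.txt body and the URLs out of a sitemap. The helper names and sample contents are hypothetical, and the code assumes the files have already been downloaded as text.

```python
import xml.etree.ElementTree as ET
from typing import List


def disallowed_paths(robots_txt: str) -> List[str]:
    """Return the unique paths listed in Disallow rules, in order of appearance."""
    paths: List[str] = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop trailing comments
        if line.lower().startswith("disallow:"):
            path = line.split(":", 1)[1].strip()
            if path and path not in paths:
                paths.append(path)
    return paths


def sitemap_urls(sitemap_xml: str) -> List[str]:
    """Return the text of every <loc> element, whatever its XML namespace."""
    root = ET.fromstring(sitemap_xml)
    return [el.text.strip() for el in root.iter() if el.tag.endswith("loc") and el.text]


# Hypothetical file contents for demonstration.
ROBOTS = """\
User-agent: *
Disallow: /admin/
Disallow: /backup/  # old dumps
Allow: /public/
"""

SITEMAP = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>http://target/index.html</loc></url>
  <url><loc>http://target/staff/portal.html</loc></url>
</urlset>
"""

print(disallowed_paths(ROBOTS))
print(sitemap_urls(SITEMAP))
```

Every path collected this way is a candidate to visit manually or to feed into a wordlist for later steps.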

Directory Brute-forcing

In the previous steps, I've mentioned "hidden content" and "sensitive directories" multiple times. These are directories that you can't reach by simply navigating around the web pages. If you are wondering why they are sensitive, it is because developers and admins do not expect anyone to visit them, since they aren't linked anywhere on the site. This is where directory brute-forcing comes into play: a tool requests candidate paths from a wordlist and reports the ones the server responds to. You can utilise multiple tools to achieve this objective; I'll be demonstrating DirBuster here.
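The core loop behind such tools is short. The Python sketch below requests each word from a list and keeps the paths whose status code looks interesting. It is a bare-bones illustration and not how DirBuster is implemented; the `brute_force` and `http_status` helpers, the status code list, and the fake server are all assumptions made for this example.

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Iterable
from urllib.parse import urljoin


def http_status(url: str) -> int:
    """Send a HEAD request and return the status code (0 if unreachable).

    urlopen follows redirects, so a redirecting path reports its final status.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=5) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx and 5xx responses still tell us something
    except OSError:
        return 0  # connection errors and timeouts


def brute_force(
    base_url: str,
    words: Iterable[str],
    fetch_status: Callable[[str], int] = http_status,
    interesting: Iterable[int] = (200, 204, 301, 302, 401, 403),
) -> Dict[str, int]:
    """Try each word as a path under base_url and keep the interesting hits."""
    keep = set(interesting)
    found: Dict[str, int] = {}
    for word in words:
        word = word.strip().lstrip("/")
        if not word:
            continue
        url = urljoin(base_url.rstrip("/") + "/", word)
        status = fetch_status(url)
        if status in keep:
            found[url] = status
    return found


# Offline demonstration with a fake server, so nothing is sent over the network.
FAKE_SERVER = {"http://target/admin": 403, "http://target/backup": 200}
hits = brute_force("http://target", ["admin", "backup", "nope"],
                   fetch_status=lambda url: FAKE_SERVER.get(url, 404))
print(hits)
```

A 401 or 403 is kept on purpose: a path that exists but refuses access is still worth noting. Dedicated tools add threading, recursion, and file extension handling on top of this loop.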

NOTE: This is not a how-to guide on tools; the purpose of this article is to help you build a methodology for enumerating web servers.

Utilising NSE-Scripts

After looking around and discovering something of value, we can utilise some cool Nmap scripts. Nmap offers many HTTP enumeration scripts, some of which I've listed below:

- http-enum.nse

- http-methods.nse

- http-waf-detect.nse
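Several scripts can be combined in a single run by passing a comma-separated list to Nmap's --script option. As a small sketch, the Python helper below assembles such a command line for the scripts listed above. The `nse_command` function is hypothetical, and the port list of 80 and 443 is an assumption you should adjust to whatever your earlier scan found.

```python
import shlex
from typing import List, Sequence


def nse_command(target: str, scripts: Sequence[str], ports: str = "80,443") -> List[str]:
    """Build an nmap argument list that runs the given NSE scripts."""
    return ["nmap", "-p", ports, "--script", ",".join(scripts), target]


command = nse_command("target", ["http-enum", "http-methods", "http-waf-detect"])

# Print the command so it can be reviewed or pasted into a terminal.
print(shlex.join(command))
```

The argument list can also be handed to `subprocess.run` directly if you would rather launch the scan from the script.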

Automated Vulnerability Assessments

At some point, you will realise the need for automation. We can utilise multiple tools and frameworks to look for vulnerabilities on a web server. A simple scan can be done with Nikto (used here), another tool that ships with Kali. You can also use tools specific to a service or CMS, such as WPScan, which is built solely to look for vulnerabilities in WordPress.

A simple nikto scan can be done with:

nikto -h target.com

EndNote

Enumeration is not a static process and cannot be learnt from a few tools and techniques. Here I've listed a few methods that I use myself and that might assist you in building an enumeration methodology. The topics mentioned are suggestions of what you can do, not what you should do, so learn something new every day and add new tricks to your arsenal. If you had a good time reading my content and learnt something new from it, consider dropping a comment; you might as well follow me on Twitter.

Here you can find an http-enum cheatsheet by The XSS-Rat (available for free, not pirated).
